When to Use Repeatable Read Isolation Level

The serializable isolation level provides complete protection from concurrency effects that can threaten data integrity and lead to incorrect query results. Using serializable isolation means that if a transaction can be shown to produce correct results with no concurrent activity, it will continue to perform correctly when competing with any combination of concurrent transactions.

This is a very powerful guarantee, and one that probably matches the intuitive transaction isolation expectations of many T-SQL programmers (though in truth, relatively few of these will routinely use serializable isolation in production).

The SQL standard defines three additional isolation levels that offer far weaker ACID isolation guarantees than serializable, in return for potentially higher concurrency and fewer potential side effects like blocking, deadlocking, and commit-time aborts.

Unlike serializable isolation, the other isolation levels are defined solely in terms of certain concurrency phenomena that might be observed. The next-strongest of the standard isolation levels after serializable is named repeatable read. The SQL standard specifies that transactions at this level permit a single concurrency phenomenon known as a phantom.

Just as we have previously seen important differences between the common intuitive meaning of ACID transaction properties and reality, the phantom phenomenon encompasses a wider range of behaviours than is often appreciated.

This post in the series looks at the actual guarantees provided by the repeatable read isolation level, and shows some of the phantom-related behaviours that can be encountered. To illustrate some points, we will refer to the following simple example query, where the simple task is to count the total number of rows in a table:

    SELECT COUNT_BIG(*) FROM dbo.SomeTable;

Repeatable Read

One odd thing about the repeatable read isolation level is that it does not really guarantee that reads are repeatable, at least in one commonly-understood sense. This is another example where intuitive meaning alone can be misleading. Executing the same query twice inside the same repeatable read transaction can indeed return different results.

In addition to that, the SQL Server implementation of repeatable read means that a single read of a set of data might miss some rows that logically ought to be considered in the query result. While undeniably implementation-specific, this behaviour is fully in line with the definition of repeatable read contained in the SQL standard.

The final thing I want to note quickly before delving into details is that repeatable read in SQL Server does not provide a point-in-time view of the data.

Non-repeatable Reads

The repeatable read isolation level provides a guarantee that data will not change for the life of the transaction once it has been read for the first time.

There are a couple of subtleties contained in that definition. First, it allows data to change after the transaction starts but before the data is first accessed. Second, there is no guarantee that the transaction will actually see all the data that logically qualifies. We will see examples of both of these shortly.

There is one other preliminary we need to get out of the way quickly, which has to do with the example query we will be using. In fairness, the semantics of this query are a little fuzzy. At the risk of sounding slightly philosophical, what does it mean to count the number of rows in the table? Should the result reflect the state of the table as it was at some particular point in time? Should this point in time be the start or end of the transaction, or something else?

This might seem a bit nit-picky, but the question is a valid one in any database that supports concurrent data reads and modifications. Executing our example query could take an arbitrarily long time (given a large enough table, or resource constraints, for instance), so concurrent changes are not only possible, they might be unavoidable.

The key issue here is the potential for the concurrency phenomenon referred to as a phantom in the SQL standard. While we are counting rows in the table, some other concurrent transaction might insert new rows in a place we have already checked, or change a row we have not checked yet in such a way that it moves to a place we have already looked. People often think of phantoms as rows that might magically appear when read for a second time, in a separate statement, but the effects can be much more subtle than that.

Concurrent Insert Example

This first example shows how concurrent inserts can produce a non-repeatable read and/or result in rows being skipped. Imagine that our test table initially contains five rows, with the values 1, 3, 4, 5, and 7.
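For readers who want to follow along, the starting state could be created with something like the script below. This is only a sketch: the article does not give the table definition, so the single key column named `val` and the clustered primary key are assumptions chosen so that an ordered index scan is available.

```sql
-- Hypothetical definition: the column name "val" and the clustered
-- primary key are assumptions, not taken from the article.
CREATE TABLE dbo.SomeTable
(
    val integer NOT NULL PRIMARY KEY CLUSTERED
);

-- The five starting rows; values 2 and 6 are deliberately absent.
INSERT dbo.SomeTable (val)
VALUES (1), (3), (4), (5), (7);
```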

We now set the isolation level to repeatable read, start a transaction, and run our counting query. As you would expect, the result is five. No great mystery so far.
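In T-SQL, that first step might look like the following sketch. The transaction is intentionally left open, because the second run of the counting query has to happen inside the same transaction.

```sql
-- Session 1 (transaction T1)
SET TRANSACTION ISOLATION LEVEL REPEATABLE READ;

BEGIN TRANSACTION;

-- First run of the counting query
SELECT COUNT_BIG(*) FROM dbo.SomeTable;

-- No COMMIT yet: T1 stays open for the second read
```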

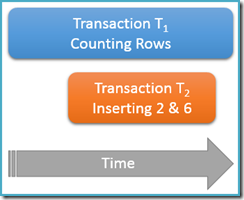

Still executing inside the same repeatable read transaction, we run the counting query again, but this time while a second concurrent transaction is inserting new rows into the same table. The diagram below shows the sequence of events, with the second transaction adding rows with values 2 and 6 (you might have noticed these values were conspicuous by their absence just above):

If our counting query were running at the serializable isolation level, it would be guaranteed to count either five or seven rows (see the previous article in this series if you need a refresher on why that is the case). How does running at the less isolated repeatable read level affect things?

Well, repeatable read isolation guarantees that the second run of the counting query will see all the previously-read rows, and they will be in the same state as before. The catch is that repeatable read isolation says nothing about how the transaction should treat the new rows (the phantoms).

Imagine that our row-counting transaction (T1) has a physical execution strategy where rows are searched in ascending index order. This is a common case, for example when a forward-ordered b-tree index scan is employed by the execution engine. Now, just after transaction T1 counts rows 1 and 3 in ascending order, transaction T2 might sneak in, insert new rows 2 and 6, and then commit its transaction.

Though we are primarily thinking of logical behaviours at this point, I should mention that there is nothing in the SQL Server locking implementation of repeatable read to prevent transaction T2 from doing this. Shared locks taken by transaction T1 on previously-read rows prevent those rows from being changed, but they do not prevent new rows from being inserted into the range of values tested by our counting query (as the key-range locks in locking serializable isolation would).
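One way to observe those shared locks is to query the `sys.dm_tran_locks` dynamic management view from the session that holds the open repeatable read transaction. The query below is a minimal sketch; it assumes the login has permission to view server state, and the exact lock resources listed (key, page, or object) depend on the table size and the locking granularity SQL Server chooses.

```sql
-- Run in the same session as the open repeatable read transaction,
-- after the counting query has completed.
SELECT
    resource_type,         -- e.g. KEY, PAGE, OBJECT
    resource_description,
    request_mode,          -- S for shared, IS for intent-shared
    request_status
FROM sys.dm_tran_locks
WHERE request_session_id = @@SPID;
```

Shared (S) locks on the rows already read remain in the output until the transaction ends. Nothing in the list covers the gaps between existing key values, which is why an insert of a new value is not blocked.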

Anyway, with the two new rows committed, transaction T1 continues its ascending-order search, eventually encountering rows 4, 5, 6, and 7. Note that T1 sees new row 6 in this scenario, but not new row 2 (due to the ordered search, and its position when the insert occurred).

The upshot is that the repeatable read counting query reports that the table contains six rows (values 1, 3, 4, 5, 6, and 7). This result is inconsistent with the previous result of five rows obtained inside the same transaction. The second read counted phantom row 6 but missed phantom row 2. So much for the intuitive meaning of a repeatable read!
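The second transaction's work could be sketched as below, assuming a hypothetical key column named `val` (the article does not name the column). Run from a second session while T1 is still open, the insert is not expected to block, because T1's shared locks cover only the rows it has already read.

```sql
-- Session 2 (transaction T2)
BEGIN TRANSACTION;

-- "val" is an assumed column name
INSERT dbo.SomeTable (val)
VALUES (2), (6);

COMMIT TRANSACTION;

-- Back in session 1, still inside T1:
-- SELECT COUNT_BIG(*) FROM dbo.SomeTable;
-- COMMIT TRANSACTION;
```

Note that running these statements by hand serializes the steps: T2 commits before T1's second scan begins, so the second count would be expected to include both new rows. The count of six described above requires the insert to land while the scan is in progress, which is a matter of timing and is much easier to hit on a large table.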

Concurrent Update Example

A similar situation can arise with a concurrent update instead of an insert. Imagine our test table is reset to contain the same five rows as before:

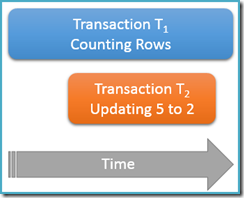

This time, we will only run our counting query once at the repeatable read isolation level, while a second concurrent transaction updates the row with value 5 to have a value of 2:

Transaction T1 again starts counting rows (in ascending order), encountering rows 1 and 3 first. Now, transaction T2 slips in, changes the value of row 5 to 2, and commits:

I have shown the updated row in the same position as before to make the change clear, but the b-tree index we are scanning maintains the data in logical order, so the real picture is closer to this:

The point is that transaction T1 is concurrently scanning this same structure in forward order, being currently positioned just after the entry for value 3. The counting query continues scanning forward from that point, finding rows 4 and 7 (but not row 5 of course).

To summarize, the counting query saw rows 1, 3, 4, and 7 in this scenario. It reports a count of four rows, which is strange, considering the table seems to have contained five rows throughout!
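The update performed by the second transaction might be written as in the sketch below, again assuming a hypothetical key column named `val`. It can only complete without waiting if T1 has not yet read the row with value 5: once T1 holds a shared lock on that row, repeatable read would make the update wait until T1 finishes.

```sql
-- Session 2 (transaction T2)
BEGIN TRANSACTION;

-- Moves the row from key position 5 to key position 2,
-- which is behind T1's current scan position in this scenario.
-- "val" is an assumed column name.
UPDATE dbo.SomeTable
SET val = 2
WHERE val = 5;

COMMIT TRANSACTION;
```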

A second run of the counting query within the same repeatable read transaction would report five rows, for similar reasons as before. As a final note, in case you are wondering, concurrent deletions do not provide an opportunity for a phantom-based anomaly under repeatable read isolation.

Final Thoughts

The preceding examples both used ascending-order scans of an index structure to present a simple view of the sort of effects phantoms can have on a repeatable-read query. It is important to understand that these illustrations do not rely in any important way on the scan direction or the fact that a b-tree index was used. Please do not form the view that ordered scans are somehow responsible and therefore to be avoided!

The same concurrency effects can be seen with a descending-order scan of an index structure, or in a variety of other physical data access scenarios. The broad point is that phantom phenomena are specifically allowed (though not required) by the SQL standard for transactions at the repeatable read level of isolation.

Not all transactions require the complete isolation guarantee provided by serializable isolation, and not many systems could tolerate the side effects if they did. Nevertheless, it pays to have a good understanding of exactly which guarantees the various isolation levels provide.

Next Time

The next part in this series looks at the even weaker isolation guarantees offered by SQL Server's default isolation level, read committed.


Source: https://sqlperformance.com/2014/04/t-sql-queries/the-repeatable-read-isolation-level
